From TechCrunch: "XNOR.ai frees AI from the prison of the supercomputer"

"When someone talks about AI, or machine learning, or deep convolutional networks, what they’re really talking about - as is the case for so many computing concepts - is a lot of carefully manicured math.The Allen Institute for Artificial Intelligence has a new idea.

At the heart of these versatile and powerful networks is a volume of calculation only achievable by the equivalent of supercomputers. More than anything else, this computational cost is what is holding back applying AI in devices of comparatively little brain: phones, embedded sensors, cameras. ...

Machine learning, Farhadi continued, tends to rely on convolutional neural networks (CNN); these involve repeatedly performing simple but extremely numerous operations on good-sized matrices of numbers. But because of the nature of the operations, many have to be performed serially rather than in parallel. ...

It’s the unfortunate reality of both training and running the machine learning systems performing all these interesting feats of AI that they feature phenomenally computationally expensive processes.

“It’s hard to scale when you need that much processing power,” Farhadi said. Even if you could fit the “beefy” - his preferred epithet for the GPU-packed servers and workstations to which machine learning models are restricted - specs into a phone, it would suck the battery dry in a minute.

Meanwhile, the accepted workaround is almost comically clumsy when you think about it: You take a load of data you want to analyze, send it over the internet to a data center where the AI actually lives and computers perhaps a thousand miles away work at top speed to calculate the result, hopefully getting back to you within a second or two."

"It’s not such a problem if you don’t need that result right away, but imagine if you had to do that in order to play a game on the highest graphical settings; you want to get those video frames up ASAP, and it’s impractical (not to mention inelegant) to send them off to be resolved remotely.And they have something which works, at least in prototype.

But improvements to both software and hardware have made it unnecessary, and our ray-traced shadows and normal maps are applied without resorting to distant data centers.

Farhadi and his team wanted to make this possible for more sophisticated AI models. But how could they cut the time required to do billions of serial operations?

“We decided to binarize the hell out of it,” he said. By simplifying the mathematical operations to rough equivalents in binary operations, they could increase the speed and efficiency with which AI models can be run by several orders of magnitude.

Here’s why. Even the simplest arithmetic problem involves a great deal of fundamental context, because transistors don’t natively understand numbers — only on and off states. Six minus four is certainly two, but in order to arrive at that, you must define six, four, two and all the numbers in between, what minus means, how to check the work to make sure it’s correct, and so on. It requires quite a bit of logic, literally, to be able to arrive at this simple result.

But chips do have some built-in capabilities, notably a set of simple operations known as logic gates. One gate might take an input, 1 (at this scale, it’s not actually a number but a voltage), and output a 0, or vice versa. That would be a simple NOT gate, also known as an inverter. Or of two inputs, if either is a 1, it outputs a 1 — but if neither or both is a 1, it outputs a 0. That’s an XOR gate.

These simple operations are carried out at the transistor level and as such are very fast. In fact, they’re pretty much the fastest calculations a computer can do, and it happens that huge arrays of numbers can be subjected to this kind of logic at once, even on ordinary processors.

The problem is, it’s not easy to frame complex math in terms that can be resolved by logic gates alone. And it’s harder still to create an algorithm that converts mathematical operations to binary ones. But that’s exactly what the AI2 engineers did."

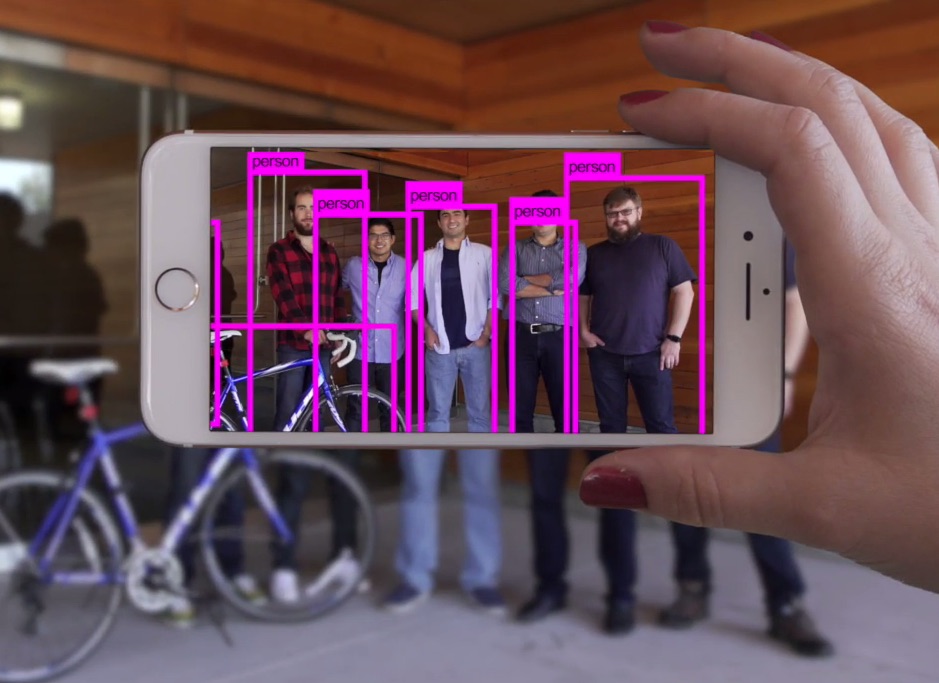

"Farhadi showed me the fruits of their labor by opening an app on his phone and pointing it out the window. The view of the Fremont cut outside was instantly overlaid with boxes dancing over various objects: boat, car, phone, their labels read.I still remember programming in IBM System/360 Assembler. I later discovered that every assembly language I had ever used was itself further compiled to/interpreted by microcode.

In a way it was underwhelming: after all, this kind of thing is what we see all the time in blog posts touting the latest in computer vision.

But those results are achieved with the benefit of supercomputers and parallelized GPUs; who knows how long it takes a state of the art algorithm to look at an image and say, “there are six boats, two cars, a phone and a bush,” as well as label their boundaries.

After all, it not only has to go over the whole scene pixel by pixel, but identify discrete objects within it and their edges, compare those to known shapes, and so on; even rudimentary object recognition is a surprisingly complex task for computer vision systems.

This prototype app, running on an everyday smartphone, was doing it 10 times a second."

Matryoshka.

Their paper, "XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks", shows that they're using CNN algorithms relying upon fast CPU bit-operators such as 'XNOR and bit-counting operations'. A spin-off company is productising this, XNOR.ai.

Can't get simpler than that .. until quantum computing.

No comments:

Post a Comment

Comments are moderated. Keep it polite and no gratuitous links to your business website - we're not a billboard here.